Technology Decoded

Previous edition: 10 May 2024

OpenAI releases new content authentication tools

OpenAI, the startup behind ChatGPT, has released new content authentication tools to help distinguish between real and AI-generated content, including watermarks for AI-generated voice content.

The tools are part of a wider effort from OpenAI to combat generative AI’s role in online misinformation.

The startup has also joined the steering committee of the Coalition for Content Provenance and Authenticity (C2PA), which is developing a metadata standard for tagging AI-generated content.

C2PA metadata will be added to content created by DALL-E 3, ChatGPT and OpenAI's video generator Sora.

In a blog post announcing the new watermarks, OpenAI wrote that it hoped C2PA metadata would help build trust between online users and AI developers.

“As adoption of the standard increases, this [C2PA metadata] can accompany content through its lifecycle of sharing, modification, and reuse,” according to OpenAI.

“Over time, we believe this kind of metadata will be something people come to expect, filling a crucial gap in digital content authenticity practices,” the startup’s blog read.

Alongside its financial backer Microsoft, OpenAI has also launched a $2m fund to educate online users about AI-generated content.

The fund includes support for Older Adults Technology Services (OATS), an organisation that provides digital training to older people to boost digital equity.

The proliferation of AI-generated content online has sparked debate and concern ahead of the many elections set to take place around the world in 2024. Research and analysis company GlobalData estimates that around four billion people will vote in the next 12 months.

In its blog, OpenAI concluded that responsibility for preventing the spread of AI-generated misinformation also lies with content creators and social media sites.

“While technical solutions like the above give us active tools for our defences, effectively enabling content authenticity in practice will require collective action,” it wrote.

Andrew Newell, chief scientific officer at biometric identification company iProov, said that watermarking AI content, while important, was only part of the solution to tackling deepfakes.

“If deepfakes continue to develop at this pace they will soon erode any faith society once had in audio-visual content, and trust in material from any source, whether genuine or fake, will be destroyed,” he said.

Newell said that more AI developers and researchers needed to access and use C2PA metadata to create an industry-wide safeguard.

"However, as [C2PA] is rolled out more widely, there is a danger that threat actors use the tool to fine-tune their own deepfakes. That's why there is a need for constant rapid evolution of defences to ensure you stay one step ahead of cybercriminals," he said.

Latest news

Analysis: will Microsoft's AI investment pay off?

Microsoft was an early backer of OpenAI, investing $1bn in the startup in 2019. Now, with reports that it is training an AI model bigger than Google Gemini, will Microsoft’s investment in AI finally pay off?

Interview: former Morgan Stanley COO Jim Rosenthal’s BlueVoyant tackles AI cyber risk

Rosenthal talks to Verdict about how his cybersecurity company is helping businesses address generative AI cyber threats.

In data: managed security services will be cybersecurity’s largest sub-segment in 2027

Managed security services will be the largest sub-segment within the cybersecurity market in 2027, according to research and analysis company GlobalData.

Tesla facing US fraud probe for Elon Musk’s misleading autopilot comments

The US Department of Justice is looking into whether Tesla committed wire and securities fraud around CEO Elon Musk’s bold claims about its autopilot vehicle technology, Reuters first reported on Wednesday (8 May).

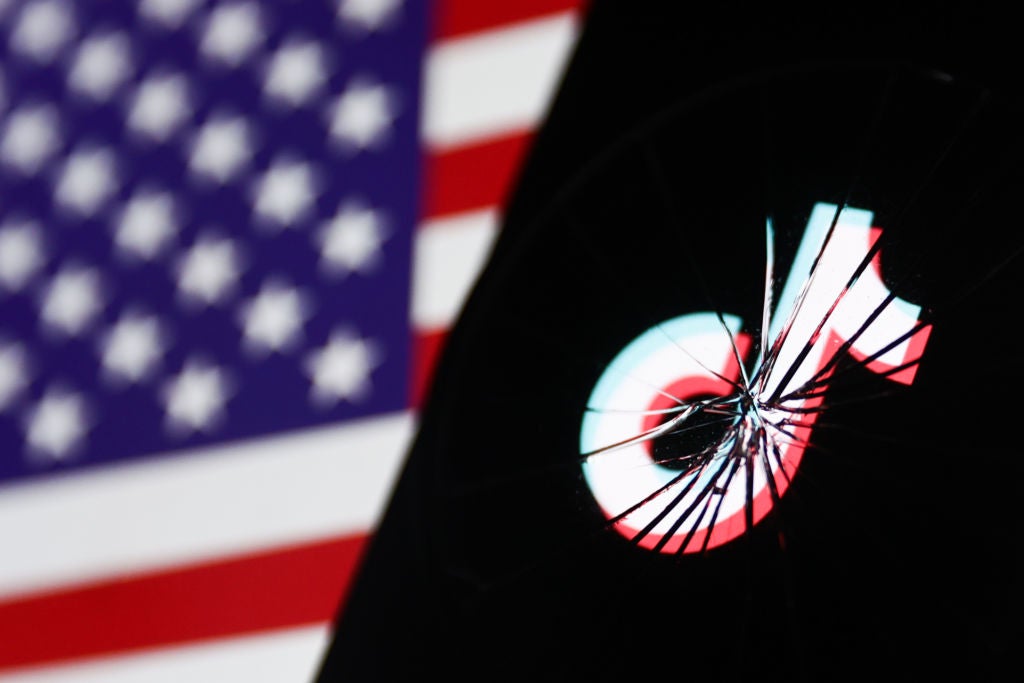

TikTok files lawsuit to block US law that could ban app

TikTok and its parent company ByteDance filed a lawsuit on Tuesday (7 May) to block a controversial law, signed by US President Joe Biden, that would force the Chinese company to divest its popular short-video app or see it banned in the country.

Q&A: GlobalData's Laura Petrone on the US TikTok ban and the ‘splinternet'

In April, the US signed into law the Protecting Americans from Foreign Adversary Controlled Applications Act. The act forces ByteDance to sell TikTok to a US company to avoid an outright ban.

In our previous edition

Technology Decoded

Analysis: Could UK self-driving unicorn Wayve overtake its competitors?

09 May 2024

Technology Decoded

Speculation mounts about Ministry of Defence IT supply chain following personal data breach

08 May 2024

Technology Decoded

What is no-code AI and why does it matter?

07 May 2024